AI that partners with people to solve real problems.

From population-scale medical imaging and patient data to engineering design and simulation (CAD, CFD, FEA, and control theory), and even music and art, this is AI that learns structure across disciplines and organizes it into a unified graph. It is designed not to replace expertise but to amplify it: helping experts diagnose faster, revealing latent patterns across large volumes of data, enabling discovery, and accelerating science, engineering, and creativity.

What We're Working On

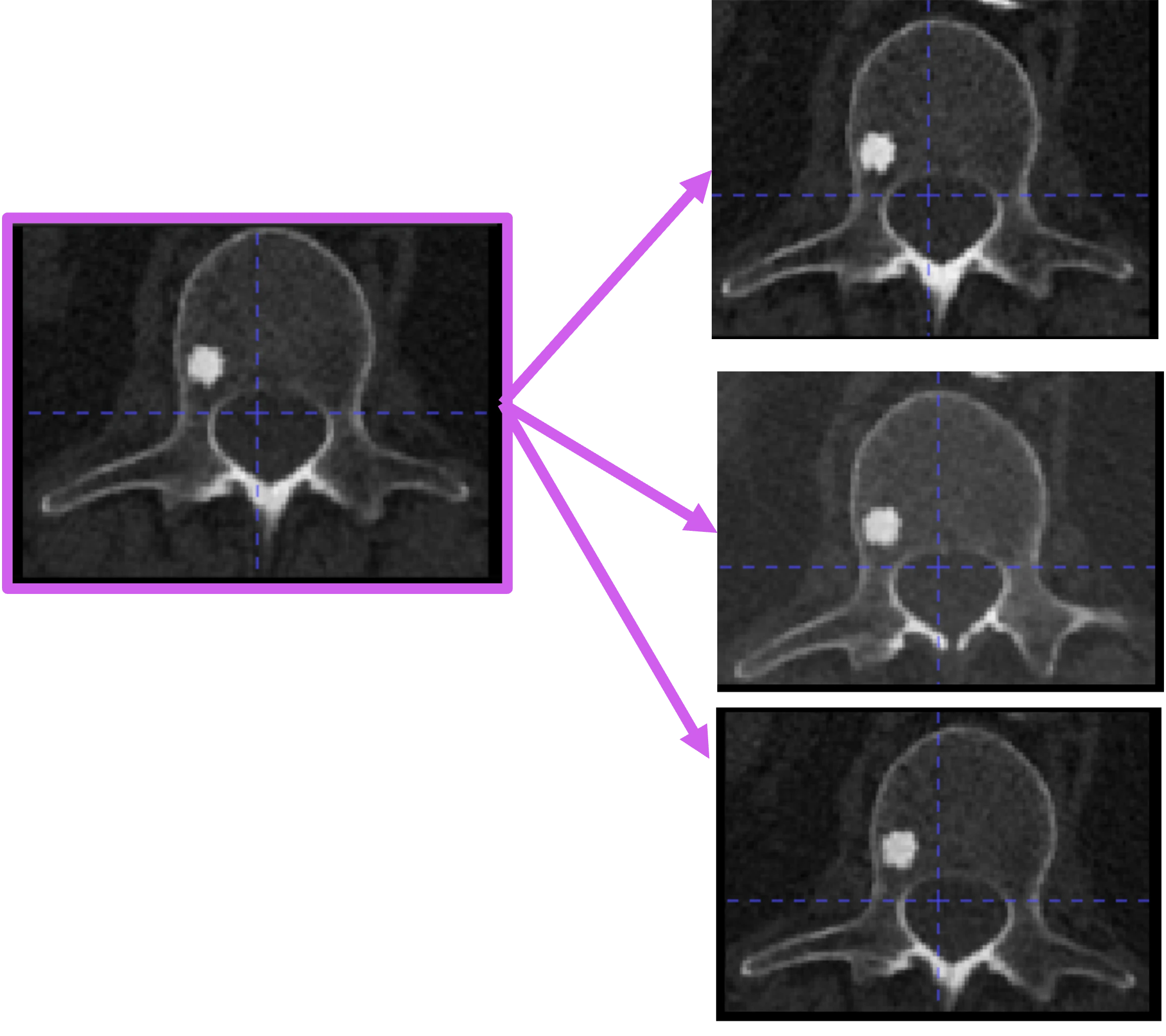

Learning 3D Medical Images with No Labels:

No labels. Unsupervised. 91% top-1 nearest-neighbour accuracy across 130 imbalanced categories and 300k medical images (MRI, CT, mammography).
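The section doesn't say exactly how the 91% figure is computed. As a minimal sketch, assuming a leave-one-out protocol and cosine similarity over embeddings produced by some self-supervised encoder (not shown), top-1 nearest-neighbour accuracy works like this: each image takes the label of its most similar *other* image, and labels are consulted only for scoring, never for training. The embeddings and labels below are hypothetical toy data.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top1_nn_accuracy(embeddings, labels):
    """Leave-one-out top-1 nearest-neighbour accuracy.

    Each embedding is assigned the label of its most similar *other* embedding;
    the labels are only used to score that assignment.
    """
    correct = 0
    for i, query in enumerate(embeddings):
        nearest = max(
            (j for j in range(len(embeddings)) if j != i),
            key=lambda j: cosine_similarity(query, embeddings[j]),
        )
        correct += labels[nearest] == labels[i]
    return correct / len(embeddings)


# Hypothetical toy embeddings standing in for encoder outputs.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = ["CT", "CT", "MRI", "MRI"]
print(top1_nn_accuracy(embeddings, labels))  # 1.0
```

Because the categories are imbalanced, a per-class (balanced) accuracy is a useful companion number; this sketch reports plain overall accuracy only.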

Searching Medical Images for a Needle in a Haystack:

Search so accurate it can find an individual patient among hundreds of thousands, using any one of their organ images as the query.
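How the search is implemented isn't described here. A minimal sketch, assuming every organ image has already been embedded as a vector and that retrieval is brute-force cosine similarity (a production system would more likely use an approximate nearest-neighbour index): a query succeeds if one of its top-k results, excluding the query image itself, belongs to the same patient. The `(image_id, patient_id, vector)` index layout, the function names, and the toy data are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(query_vec, index, k=5, exclude=None):
    """Rank index entries (image_id, patient_id, vector) by similarity to the query."""
    scored = [
        (cosine(query_vec, vec), image_id, patient_id)
        for image_id, patient_id, vec in index
        if image_id != exclude  # never return the query image itself
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(image_id, patient_id) for _, image_id, patient_id in scored[:k]]


def patient_hit_rate(index, k=1):
    """Fraction of images whose top-k results include another image of the same patient."""
    hits = 0
    for image_id, patient_id, vec in index:
        results = search(vec, index, k=k, exclude=image_id)
        hits += any(pid == patient_id for _, pid in results)
    return hits / len(index)


# Hypothetical index: two patients, two organ images each.
index = [
    ("liver_a", "A", [1.0, 0.0, 0.0]),
    ("kidney_a", "A", [0.9, 0.2, 0.0]),
    ("liver_b", "B", [0.0, 1.0, 0.0]),
    ("lung_b", "B", [0.1, 0.95, 0.1]),
]
print(search([1.0, 0.0, 0.0], index, k=1, exclude="liver_a"))
print(patient_hit_rate(index, k=1))
```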

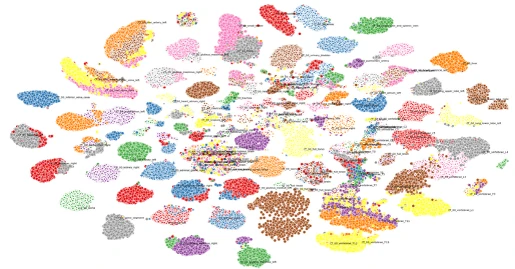

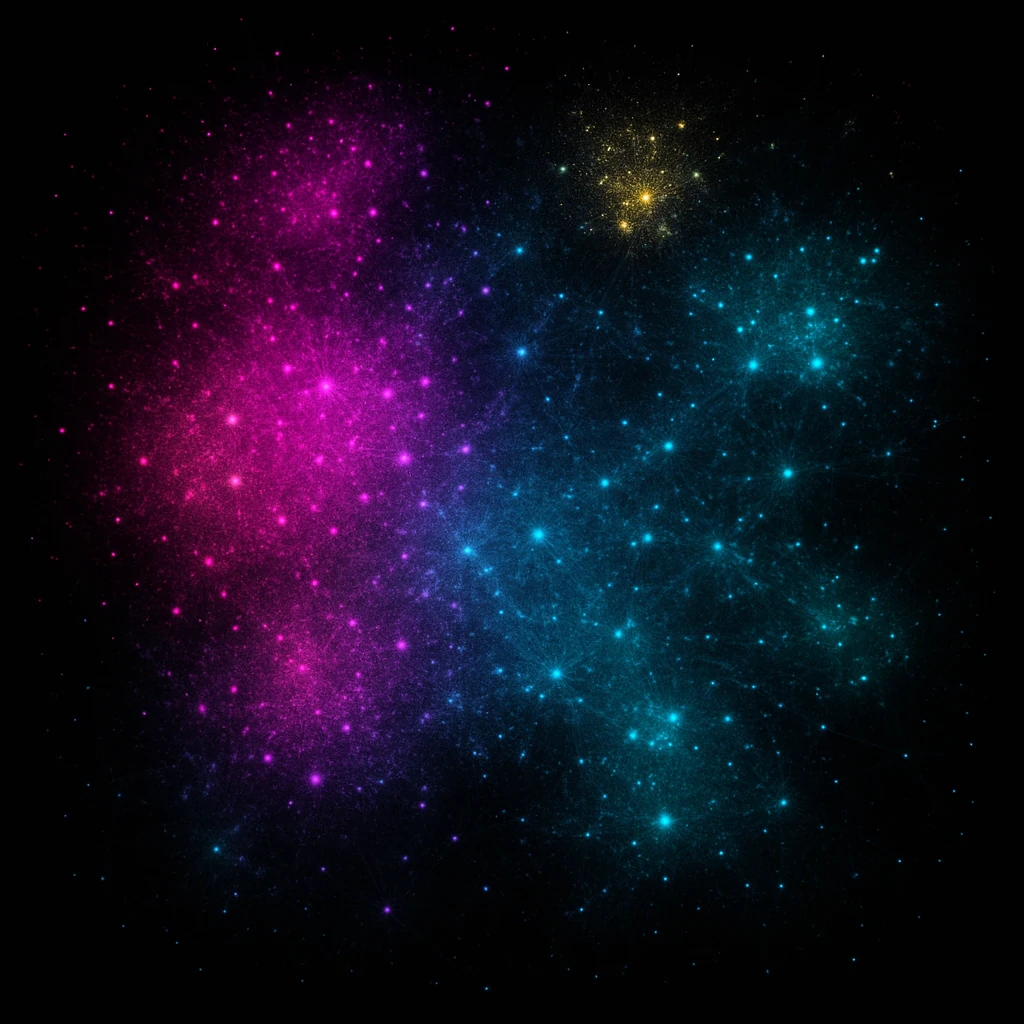

Self-Forming Organ Graphs and Topology:

Learned organ networks with scale-free, small-world structure, no supervision needed. Social networks and neighbourhoods, built from organs.
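"Small-world" and "scale-free" are measurable properties. As a sketch using standard graph measures (not necessarily the ones used in this project), on a hypothetical undirected organ graph stored as a dict of neighbour sets: a small-world graph combines a high average clustering coefficient with a short average path length, while a scale-free graph has a heavy-tailed degree distribution.

```python
from collections import Counter, deque


def clustering_coefficient(graph, node):
    """Fraction of a node's neighbour pairs that are themselves connected."""
    neighbours = list(graph[node])
    k = len(neighbours)
    if k < 2:
        return 0.0
    links = sum(
        1
        for i in range(k)
        for j in range(i + 1, k)
        if neighbours[j] in graph[neighbours[i]]
    )
    return 2.0 * links / (k * (k - 1))


def average_clustering(graph):
    """Mean clustering coefficient over all nodes."""
    return sum(clustering_coefficient(graph, n) for n in graph) / len(graph)


def average_shortest_path(graph):
    """Mean BFS hop distance over all reachable ordered node pairs."""
    total, pairs = 0, 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in graph[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


def degree_histogram(graph):
    """Degree -> node count; a heavy tail hints at (but doesn't prove) scale-free structure."""
    return Counter(len(nbrs) for nbrs in graph.values())


# Hypothetical toy organ graph.
organs = {
    "liver": {"kidney", "spleen"},
    "kidney": {"liver", "spleen"},
    "spleen": {"liver", "kidney", "heart"},
    "heart": {"spleen"},
}
print(average_clustering(organs), average_shortest_path(organs), degree_histogram(organs))
```

In practice, each number is compared against a random graph with the same numbers of nodes and edges: much higher clustering at a similar path length is the small-world signature.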

The 19th Century Language Model (WIP):

A small, contemptible LLM that insults you with impeccable diction. Training from scratch on the best of 18th- and 19th-century writing is in progress. Rust + Burn + WASM + WebGPU. Runs on the edge, e.g. on your phone.

All-Modality World Model (WIP):

One model for medicine, text, images, engineering, CAD, FEA, CFD, control theory, music, art, and more: a generative foundation model aimed at real understanding. Training in progress.

3D Segmentation Without Noisy Skip Connections:

Skip connections are noisy. We can remove them and still reach 94% Dice on the TotalSegmentator dataset.
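The Dice score measures overlap between a predicted mask P and a ground-truth mask T for one structure: Dice = 2|P ∩ T| / (|P| + |T|), from 0 (no overlap) to 1 (perfect match). The section doesn't say how the 94% is aggregated; a common convention, assumed here, is the mean over classes. A minimal sketch over nested `[z][y][x]` label volumes, with the additional assumed convention that a class absent from both volumes scores 1:

```python
def flatten(volume):
    """Flatten a nested [z][y][x] label volume into a flat list of voxel labels."""
    return [v for plane in volume for row in plane for v in row]


def dice(pred, target, label):
    """Dice = 2|P ∩ T| / (|P| + |T|) for a single class label."""
    p = [v == label for v in pred]
    t = [v == label for v in target]
    intersection = sum(a and b for a, b in zip(p, t))
    denom = sum(p) + sum(t)
    return 1.0 if denom == 0 else 2.0 * intersection / denom  # empty-vs-empty counts as perfect


def mean_dice(pred_volume, target_volume, labels):
    """Per-class Dice and its unweighted mean over the given foreground labels."""
    pred, target = flatten(pred_volume), flatten(target_volume)
    scores = {label: dice(pred, target, label) for label in labels}
    return sum(scores.values()) / len(scores), scores


# Toy 2x2x2 volumes: 0 = background, 1 and 2 = two hypothetical organs.
target = [[[0, 1], [1, 1]], [[0, 0], [2, 2]]]
pred = [[[0, 1], [1, 0]], [[0, 0], [2, 2]]]
mean, per_class = mean_dice(pred, target, labels=[1, 2])
print(mean, per_class)
```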

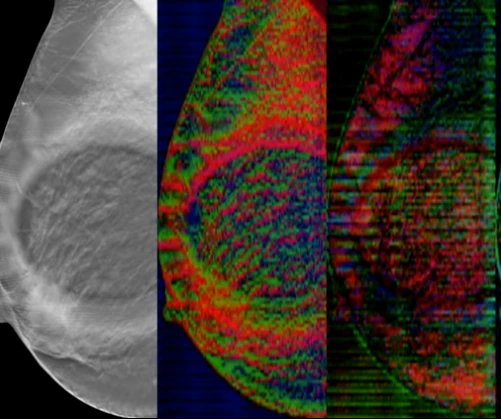

Explainable AI - See What the AI Sees

AI doesn't have to be a black box: it's possible to see what a model pays attention to. This works best with large amounts of data and self-supervised learning.
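The section doesn't name the explanation method it uses. One simple, model-agnostic technique, shown here purely as a stand-in, is occlusion sensitivity: cover part of the input, re-score it, and record how much the score drops. Regions whose occlusion hurts most are the ones the model relies on. A minimal 2D sketch, where `score_fn` is a hypothetical placeholder for any model that maps an image to a scalar; the same loop extends to 3D volumes by adding a depth axis.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Occlusion sensitivity for a 2D image given as a list of rows.

    Slides a patch x patch square of `fill` values over the image and stores,
    for every pixel in that square, how much the model's score dropped.
    """
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for y in rows:
                for x in cols:
                    occluded[y][x] = fill
            drop = baseline - score_fn(occluded)
            for y in rows:
                for x in cols:
                    heatmap[y][x] = drop
    return heatmap


# Hypothetical "model" that only looks at the top-left 2x2 corner.
def corner_score(img):
    return img[0][0] + img[0][1] + img[1][0] + img[1][1]


image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_map(image, corner_score):
    print(row)
```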

Why This Matters

At first glance, the projects above may seem unrelated. But connections always exist, even if they aren't immediately visible. Take control theory, for example: mechanical systems can be modeled with electrical analogs. Under the force-current (mobility) analogy, a mass becomes a grounded capacitor, a spring becomes an inductor, and a damper becomes a resistor. Likewise, in hydraulic systems, pressure maps to voltage and fluid flow to current. The math aligns.
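To make the mechanical-electrical analogy concrete, here is a minimal sketch assuming a mass-spring-damper driven by a constant force and the force-current (mobility) mapping: force becomes current, velocity becomes voltage, m becomes C, the damping coefficient b becomes 1/R, and the stiffness k becomes 1/L. Both systems then obey the same equation, m·dv/dt + b·v + k·∫v dt = F versus C·dV/dt + V/R + (1/L)·∫V dt = I, so integrating them with the same semi-implicit Euler scheme yields matching trajectories. The class and function names are illustrative, not from any library.

```python
from dataclasses import dataclass


@dataclass
class MassSpringDamper:
    mass: float       # m [kg]
    damping: float    # b [N*s/m]
    stiffness: float  # k [N/m]


@dataclass
class ParallelRLC:
    capacitance: float  # C [F]
    resistance: float   # R [ohm]
    inductance: float   # L [H]


def mobility_analog(mech: MassSpringDamper) -> ParallelRLC:
    """Force-current analogy: m -> C, b -> 1/R, k -> 1/L."""
    return ParallelRLC(
        capacitance=mech.mass,
        resistance=1.0 / mech.damping,
        inductance=1.0 / mech.stiffness,
    )


def simulate_mechanical(mech, force, dt, steps):
    """Semi-implicit Euler on m*dv/dt = F - b*v - k*x; returns the velocity trace."""
    x, v, trace = 0.0, 0.0, []
    for _ in range(steps):
        v += (force - mech.damping * v - mech.stiffness * x) / mech.mass * dt
        x += v * dt
        trace.append(v)
    return trace


def simulate_circuit(rlc, current, dt, steps):
    """Same scheme on C*dV/dt = I - V/R - i_L with L*di_L/dt = V; returns the voltage trace."""
    i_l, volt, trace = 0.0, 0.0, []
    for _ in range(steps):
        volt += (current - volt / rlc.resistance - i_l) / rlc.capacitance * dt
        i_l += volt / rlc.inductance * dt
        trace.append(volt)
    return trace


mech = MassSpringDamper(mass=2.0, damping=0.5, stiffness=3.0)
rlc = mobility_analog(mech)
velocity = simulate_mechanical(mech, force=1.0, dt=1e-3, steps=5000)
voltage = simulate_circuit(rlc, current=1.0, dt=1e-3, steps=5000)
print(max(abs(a - b) for a, b in zip(velocity, voltage)))  # floating-point noise only
```

The spring force k·x plays the role of the inductor current i_L, which is why the circuit needs no separate "position" state.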

For AI to uncover insights we ourselves might miss, it must be exposed to a broad and diverse range of experiences. Medicine, engineering, art, music, literature, even fashion, may appear incongruous, but their intersections hold deep potential. When we visualize these relationships as graphs, structure begins to emerge (hubs, bridges, clusters), revealing meaning that isn't obvious on the surface.
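Hubs, bridges, and clusters have precise graph definitions. A minimal sketch on a hypothetical undirected graph stored as a dict of neighbour sets: hubs are the highest-degree nodes, bridges are edges whose removal disconnects the graph (found with Tarjan's low-link depth-first search), and, as one simple notion of cluster, the pieces left after cutting every bridge. Real cluster analysis would more likely use community detection; this only illustrates the vocabulary, and the recursive search suits modest graph sizes.

```python
def hubs(graph, top=3):
    """Nodes with the highest degree."""
    return sorted(graph, key=lambda n: len(graph[n]), reverse=True)[:top]


def bridges(graph):
    """Tarjan's algorithm: edges whose removal disconnects the graph."""
    disc, low, found = {}, {}, []
    timer = [0]

    def dfs(u, parent):
        disc[u] = low[u] = timer[0]
        timer[0] += 1
        for v in graph[u]:
            if v == parent:
                continue
            if v in disc:  # back edge to an already-visited node
                low[u] = min(low[u], disc[v])
            else:
                dfs(v, u)
                low[u] = min(low[u], low[v])
                if low[v] > disc[u]:  # v's subtree cannot reach above u
                    found.append((u, v))

    for node in graph:
        if node not in disc:
            dfs(node, None)
    return found


def clusters_without_bridges(graph):
    """Connected groups that remain after removing every bridge edge."""
    cut = {frozenset(edge) for edge in bridges(graph)}
    seen, groups = set(), []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        stack, group = [start], set()
        while stack:
            u = stack.pop()
            group.add(u)
            for v in graph[u]:
                if v not in seen and frozenset((u, v)) not in cut:
                    seen.add(v)
                    stack.append(v)
        groups.append(group)
    return groups


# Hypothetical graph: two triangles joined by a single edge c-d.
g = {
    "a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"},
    "d": {"c", "e", "f"}, "e": {"d", "f"}, "f": {"d", "e"},
}
print(hubs(g, top=2), bridges(g), clusters_without_bridges(g))
```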

Our core thesis is simple: these connections exist, and we aim to find them and graph them. That is the purpose of the world model we are developing, and each of the projects above is part of that effort. Emergence will arise. Structure will form. The connections will be found. Stay tuned...